Automation at work may be coming faster than employers realize.

Three major tech companies announced new automation and AI capabilities for their enterprise clients this week, exemplifying AI’s explosive growth even amid rising concern about its impact on workers.

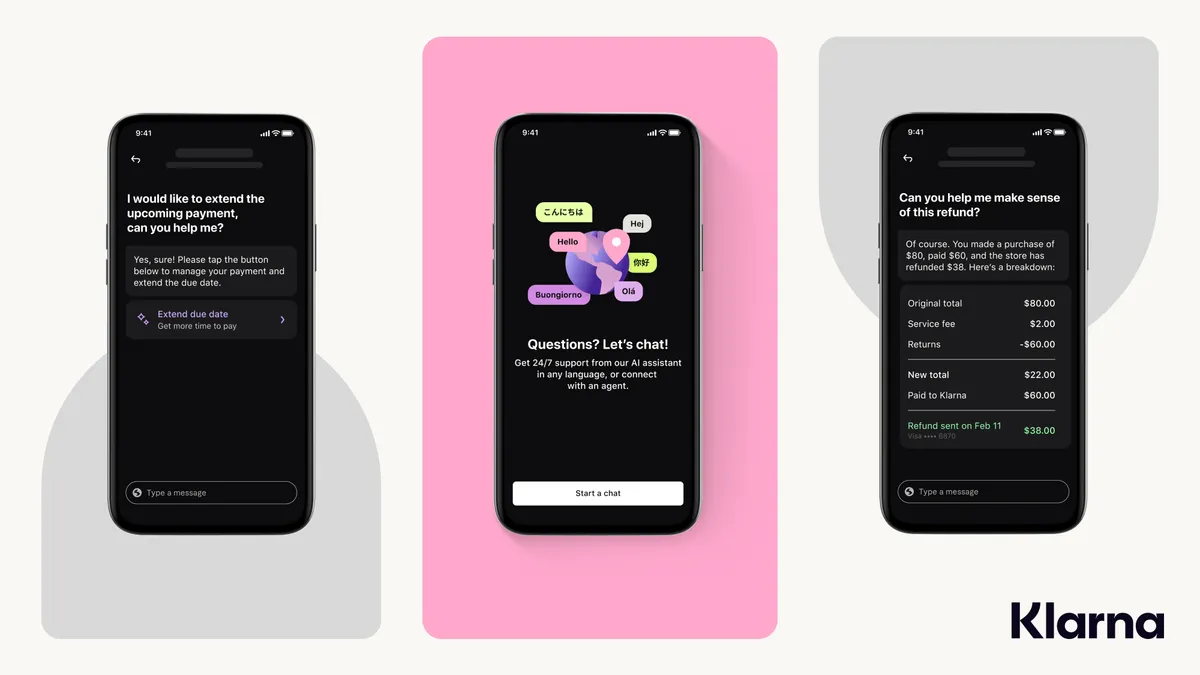

Microsoft, IBM and Google Cloud (in partnership with UKG, a well-known HR technology provider) announced separate automated tools that can perform worker tasks such as creating job posts and listings, identifying and contacting potential candidates, managing employee requests, and generating individualized learning programs for employees. Many of the new additions complement AI programs that already exist within the companies’ platforms or provide easy access to such tools through a single user interface.

Since ChatGPT’s viral rise around the beginning of the year, employers have grappled with how their companies could capitalize on AI. Large language models like ChatGPT can take over various mundane tasks, such as sending emails, sorting through applications or drafting job descriptions, experts previously told HR Dive.

But candidates may also notice an over-reliance on automated tools; experts noted that a personal touch remains important in recruiting, even as automation eases some tasks. And companies looking to adopt AI should consult employees about what they actually need from automation, according to a Bain & Co. report from March. Workers likely know best which of their tasks could be automated, the report said.

The federal government has also taken an interest in how employers manage AI. On May 3, the White House released a Request for Information on how automated tools “monitor, manage, and evaluate” workers. Shortly before that, leaders from four federal agencies issued a joint statement outlining how U.S. laws and regulations apply to automation technology, with an emphasis on the potential pitfalls employers could fall into.

“Although many of these tools offer the promise of advancement, their use also has the potential to perpetuate unlawful bias, automate unlawful discrimination and produce other harmful outcomes,” the statement said.

This “united federal intent,” according to experts, reflects the growing ubiquity of the technology and underscores why employers should clearly understand how and why they are using such tools, so they can avoid common traps like inadvertently reinforcing old biases.