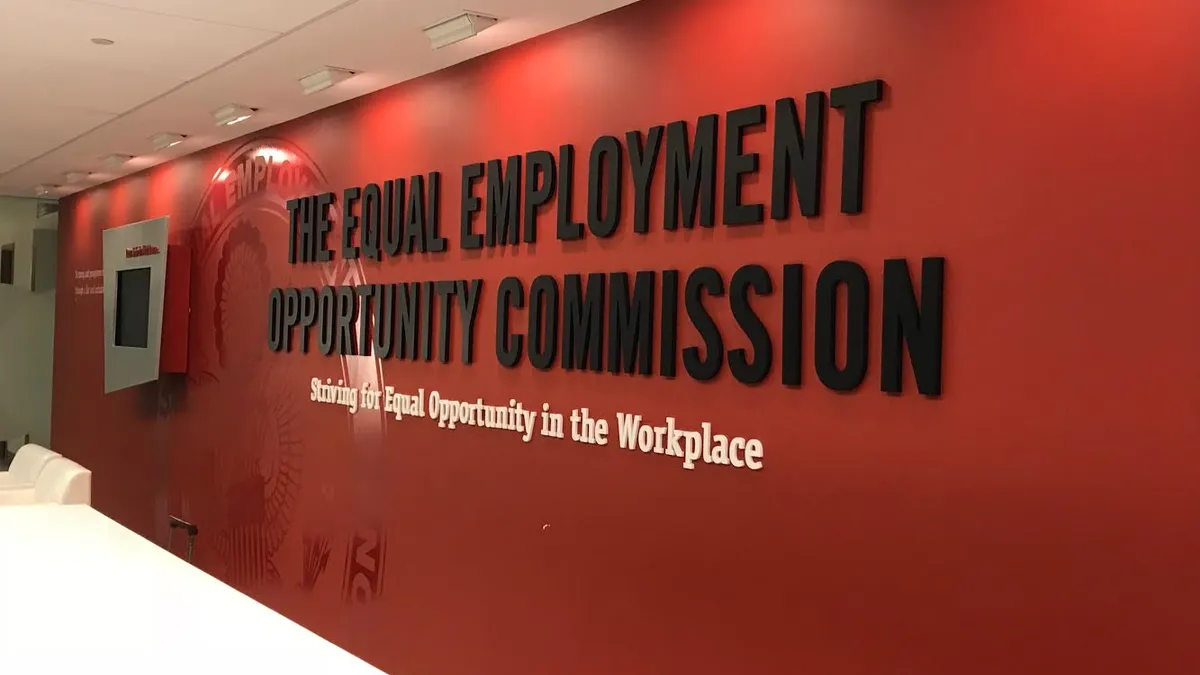

As AI increasingly plays a role in hiring and firing decisions, the U.S. Equal Employment Opportunity Commission is trying to make sure the technology doesn’t engender discrimination.

EEOC Vice Chair Jocelyn Samuels said the commission’s goal is to ensure “we can enjoy the benefits of new technology while protecting the fundamental civil rights that are enshrined in our laws.”

On Jan. 31, the EEOC heard from computer scientists, civil rights advocates, legal experts, employer representatives and an industrial-organizational psychologist during a public hearing on AI and employment discrimination, which about 2,950 people attended online.

“The rapid adoption of AI and other systems has truly opened a new frontier,” Chair Charlotte Burrows said.

While AI technology can revolutionize work and improve efficiency, it's important to make sure it doesn't perpetuate historical discrimination, Samuels said. Title VII of the Civil Rights Act of 1964 already covers discrimination in this area, and Commissioner Andrea Lucas said she hopes the existing law can be applied successfully to these tools.

New York City has led the charge in codifying restrictions on how companies can use automated employment decision tools. Its law, which took effect Jan. 1, requires a bias audit before such a tool can be used and requires employers to notify job applicants and employees before using it. Enforcement of the law, however, has been delayed until April 15.

Even if a company isn’t screening for protected categories like race, gender or sexual orientation, it could be using proxies for discriminatory preferences, said Pauline Kim, a professor of law at Washington University School of Law in St. Louis.

For example, a system that uses criminal records and credit history for background checks could discriminate against Black, Native American and other people of color, because those records can reflect racial profiling and the history of redlining, respectively, said ReNika Moore, director of the American Civil Liberties Union's racial justice program. Zip codes or college education can also serve as proxies for race, Moore said.

"Automated hiring programs are initially advertised as a way to clone your best worker — a slogan that, in effect, replicates bias," said Ifeoma Ajunwa, an associate professor of law at the University of North Carolina School of Law.

If a system used someone named Jared who plays lacrosse as its model employee, the name alone could lead it to favor White and male workers, because Social Security records show that the name Jared is highly correlated with those groups, Ajunwa said. Lacrosse, likewise, is typically associated with high schools in affluent White neighborhoods, a legacy of racial segregation in the United States, Ajunwa added.

Ajunwa recommended that employers instead use variables that are highly predictive of, or highly correlated with, successful job performance.

Gary Friedman, a management-side lawyer and a senior partner in the employment litigation practice group at Weil, Gotshal & Manges LLP, said employers favor disclosing their use of AI in the hiring process. But he said the New York City law is an example of regulation that could put companies at risk and will discourage them from using the technology effectively.