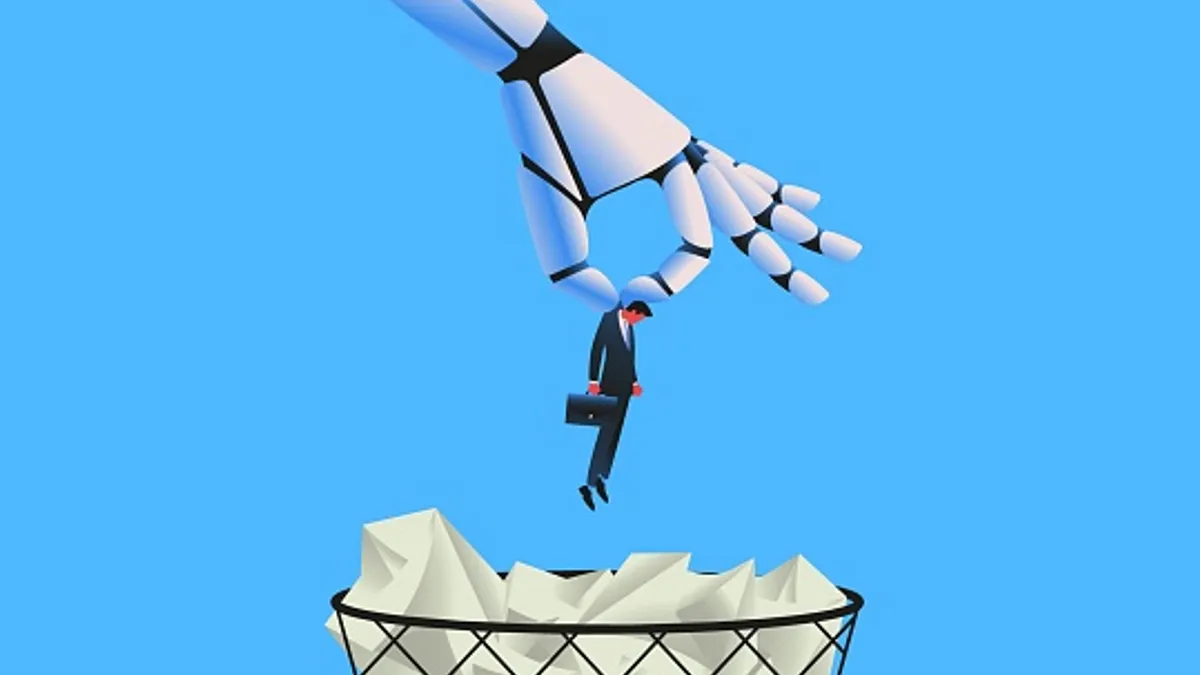

More than 70% of organizations are struggling to keep up with the risks of using artificial intelligence (AI) tools — and should consider using Responsible AI (RAI) programs to stay on pace with the latest advances, according to a June 20 report from MIT Sloan Management Review and Boston Consulting Group.

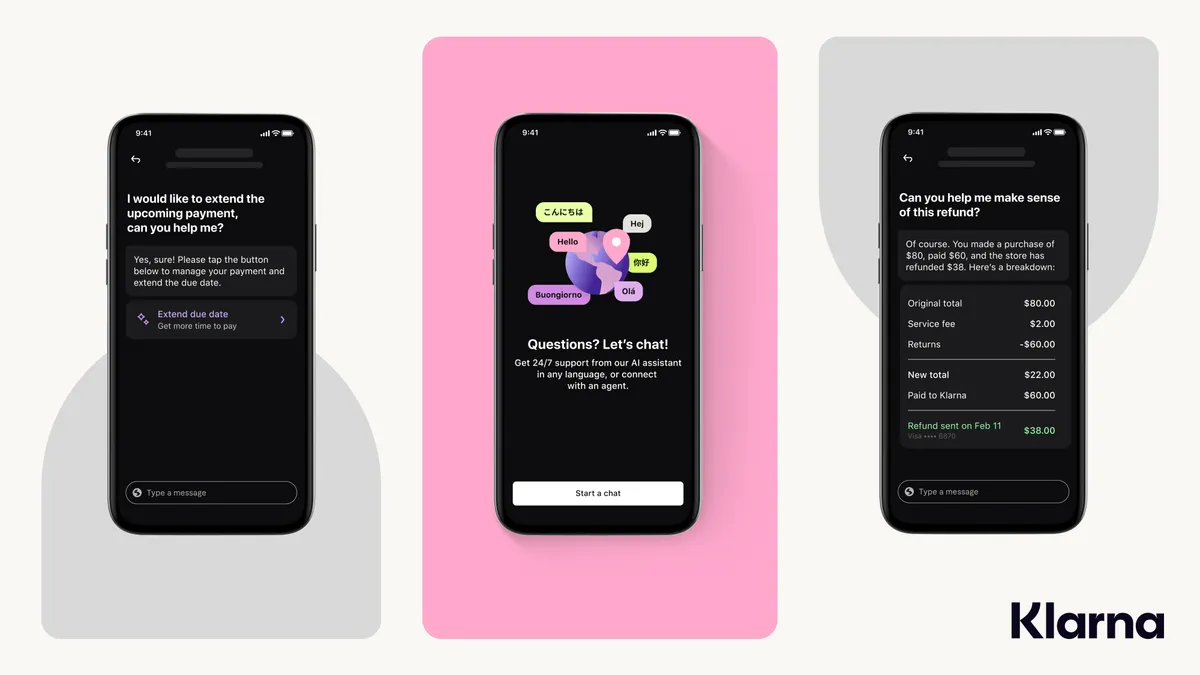

Significant risks have emerged, particularly with third-party AI tools, which account for 55% of all AI-related failures. These failures can lead to financial loss, reputational damage, the loss of customer trust, regulatory penalties, compliance challenges, litigation and more.

“The AI landscape, both from a technological and regulatory perspective, has changed so dramatically since we published our report last year,” Elizabeth Renieris, one of the report co-authors and guest editor of MIT Sloan Management Review, said in a statement.

“In fact, with the sudden and rapid adoption of generative AI tools, AI has become dinner table conversation,” she said. “And yet, many of the fundamentals remain the same. This year, our research reaffirms the urgent need for organizations to be responsible by investing in and scaling their RAI programs to address growing uses and risks of AI.”

The report includes a global survey of 1,240 respondents representing organizations in 59 industries and 87 countries, each with at least $100 million in annual revenue. A broad majority of organizations, 78%, said they are highly reliant on third-party AI, and 53% rely exclusively on third-party tools. This reliance exposes organizations to a host of risks, including some that leaders may not be aware of or understand.

In fact, a fifth of the organizations that use third-party AI tools fail to evaluate their risks at all. Instead, the report authors noted, companies need to properly evaluate third-party tools, prepare for emerging regulations, engage CEOs in RAI efforts and move quickly to mature RAI programs.

For instance, the best-prepared organizations use a variety of approaches to evaluate third-party tools and mitigate risk. Companies that deployed seven different methods were more than twice as likely to uncover lapses as those that used only three (51% versus 24%). These methods included vendor pre-certification and audits, internal product-level reviews, contractual language that mandates RAI principles, and adherence to AI-related regulatory requirements and industry standards.

CEO engagement in RAI conversations also appears to be key, the report found. Organizations with a CEO who takes a “hands-on role” — by engaging in RAI-related hiring decisions, product-level discussions or performance targets — reported 58% more business benefits than organizations with a less hands-on CEO.

“Now is the time for organizations to double down and invest in a robust RAI program,” Steven Mills, one of the report co-authors and chief AI ethics officer at BCG, said in the statement.

“While it may feel as though the technology is outpacing your RAI program’s capabilities, the solution is to increase your commitment to RAI, not pull back,” he said. “Organizations need to put leadership and resources behind their efforts to deliver business value and manage the risks.”

As AI risks become more apparent, state and federal officials are considering regulations to track and monitor the use of automated tools in the workplace. The White House has announced plans to evaluate technology used to “surveil, monitor, evaluate and manage” workers. In addition, more than 160 AI-related bills or regulations are pending in 34 state legislatures.

For the U.S. Equal Employment Opportunity Commission (EEOC), employment discrimination is a key risk to consider, especially when employers use AI-based platforms in hiring and firing decisions. Cities and states are also considering legislation that could regulate automated employment decision tools and require that job seekers be informed of their use.