By: Emilie Shumway

Published June 2, 2025

There’s a video on TikTok that has, as of early June, been liked nearly 1 million times. In it, a man in a suit and tie smiles and raises his eyebrows in excitement at the camera. A caption over the video sets the viewer up: He’s landed an interview for his dream job, and then “this happens.”

The video cuts to a virtual interview starting on his laptop. “Hello,” he says.

“Hi,” a stilted female voice says. “Thank you so much for joining the interview today.”

The man’s brows furrow. He’s talking to a robot.

“For our first question, let’s circle back. Tell me about a time when — when when when when — let’s circle back. Tell me about a time when—,” the bot says, beginning a glitching question loop that gives the man no opportunity to respond or even know what sort of story he’s supposed to be telling. On the screen is a still photo of a woman in a chambray shirt smiling in front of a house.

Finally, after a cut, the bot wraps up: “Thank you so much for answering the questions. I got a lot of great information—”

“I didn’t get to answer a question,” the man says in confusion.

Taking the human out of HR

The man is Leo Humphries, a Houston-area student who recently earned his bachelor’s degree with a focus on radio and television broadcasting. The interview was for a news reporter role at a local broadcast station, he said.

He happened to record the now-viral moment because he has a friend in HR and wanted to send the interview to her afterward for feedback on his skills, he told HR Dive. Instead, he seemed to capture the zeitgeist.

“What shocked me was how many people were in the comments saying, ‘This happened to me,’” Humphries said.

Some of the most-liked comments on the video indeed provide insight into how job seekers and people more broadly are feeling about the intrusion of artificial intelligence into parts of the recruitment process that normally belong to HR pros or hiring managers: “It’s so disrespectful to the applicants”; “Taking the HUMANS out of Human Resources is the worst corporate move ever”; and “If they don’t have the decency to interview you face to face, they aren’t worth your time.”

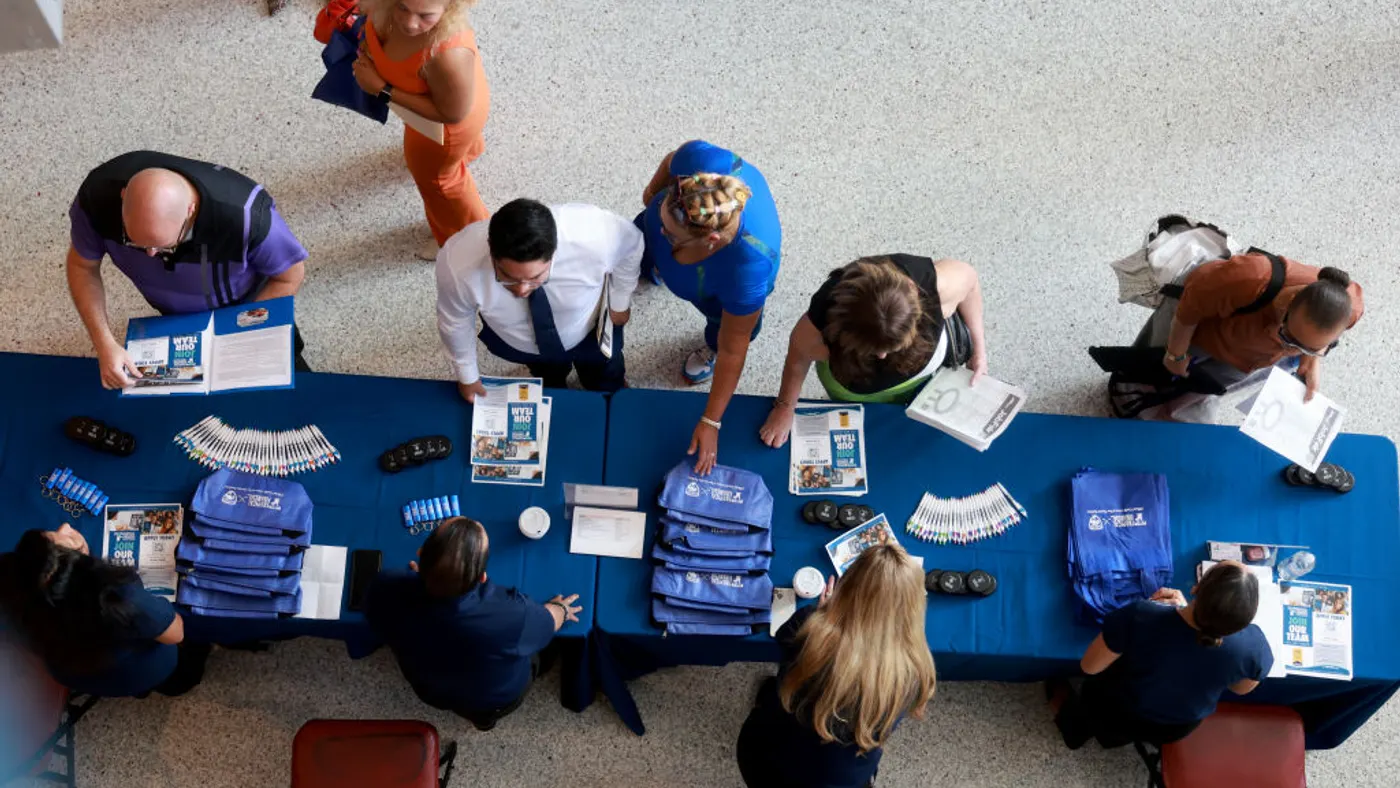

The interview chatbot phenomenon is just the latest AI workplace integration to draw a backlash from workers and customers alike. Duolingo, a company widely loved for its fun social media presence and cute owl mascot, faced intense criticism when its CEO announced an “AI-first” strategy in April, for example. The reaction was so strong that the brand deleted all its TikTok and Instagram posts.

As the glitching bot and “AI-first” backlashes demonstrate, employers may not fully understand the implications of embracing AI in its current form. An Orgvue survey of employers conducted recently found more than half of those who laid off workers with the intention of replacing them with AI regretted it. Further, a quarter of leaders said they didn’t know which roles would most benefit from AI.

The ‘wild west mentality’

Employers may fear being left behind in the AI integration boom, but the uncertainty about how to use it has fomented a “wild west mentality,” Brian Smith, an organizational psychologist and founder of IA Business Advisors, told HR Dive.

A lot of people have bought into AI and “just gone all in,” Smith said, using the tech to replace nearly any type of work they can think of. But he’s heard from employers that are fielding customer frustrations as they’re being forced to interact with bots, which lack the ability to be emotionally contextual. “It’s not empathetic to the human interaction,” he said. “It’s like speaking to Ferris Bueller’s teacher … a lot of people are hitting the ‘give me a human’ button.”

The use of AI interviewers goes even further, Smith said. “This is replacing a human to have human interaction, and I think that’s almost unethical — and it is disrespectful.” Smith hedged with “almost” because “there’s a huge discussion on what is the ethical use of AI and what is not,” he said. “And I think we’re getting really close to that boundary when we start having companies pretend that [their] candidates are speaking to a human when [they’re] not.”

Humphries told HR Dive he’d had no warning the interview would be done by AI, and he’d previously spoken to a real person at the company to arrange the interview. He shared an email from the company’s “Human Resources Department” that confirmed the interview time with a link for the meeting. “I’m looking forward to connecting with you again for your video interview,” the email said.

The process might seem a bit backwards — given interview scheduling is a more commonly embraced use of AI, while AI interviews seem to be a newer role for the tech — but the company’s head-scratching approach may underscore Smith’s wild west theory.

A (sort of) happy ending

Humphries found the experience demoralizing, he told HR Dive. “It scares me for people fresh out of college,” he said, with companies “removing so much of the humanness” from the recruitment process.

In an update posted a couple of weeks after his initial video, Humphries explained that the company saw the video and reached out to him to apologize.

“They let me know that they were trying out this new software that this company had promised them would help them with processing applicants … and they just didn’t know that this was happening,” he said. The company said it was reviewing and addressing the issues, he said, although it was unclear whether it intended to continue using AI for interviewing.

Humphries also touched on the problem of AI applicants that employers face when hiring. “I think that AI’s just confusing a lot of people,” he said.

While recent research showed AI in the hiring process might be a deal-breaker for many applicants, Humphries told HR Dive he’d still consider taking a job with the company if it offered him another interview. “With a human being, 100%,” he said.

Article top image credit: Retrieved from Leo Humphries on May 30, 2025